Facebook

| URL |

https://Persagen.com/docs/facebook.html |

| Sources |

Persagen.com | Wikipedia | other sources (cited in situ) |

| Authors |

|

| Date published |

2021-09-20 |

| Curation date |

2021-09-20 |

| Curator |

Dr. Victoria A. Stuart, Ph.D. |

| Modified |

|

| Editorial practice |

Refer here | Dates: yyyy-mm-dd |

| Summary |

Facebook is an American online social media and social networking service owned by Facebook, Inc. As of 2021, Facebook claimed 2.8 billion monthly active users, and ranked seventh in global internet usage. The subject of numerous controversies, Facebook has often been criticized over issues such as user privacy (as with the Cambridge Analytica data scandal), political manipulation (as with the 2016 U.S. elections), mass surveillance, psychological effects such as addiction and low self-esteem, and content such as fake news, conspiracy theories, copyright infringement, and hate speech. Commentators have accused Facebook of willingly facilitating the spread of such content, as well as exaggerating its number of users to appeal to advertisers. |

Self-reported summary |

|

| Main article |

Meta Platforms, Inc. |

| Key points |

|

| Main article |

|

| Related |

|

|

|

|

On 2021-10-28, Facebook, Inc. - the parent organization of the social media and social networking service Facebook (this page) - rebranded as Meta Platforms, Inc.

|

| Keywords |

- antitrust lawsuit | disinformation | hacking | header bidding | internet | misinformation | privacy | publisher | social media | social media platform

|

| Named entities |

- 2016 U.S. elections | Cambridge Analytica data scandal | Facebook | Facebook Messenger | Google | Instagram | Internet | Jedi Blue (Google-Facebook antitrust collusion; header bidding) | Meta, Inc. | Meta Platforms, Inc. | Chris Hughes | Andrew Aaron McCollum | Dustin Moskovitz | Eduardo Luiz Saverin | Mark Elliot Zuckerberg | United States

|

| Ontologies |

- Culture - Literature - Science fiction - Persons - Neal Stephenson - Works - Snow Crash

- Culture - Cultural studies - Media culture - Deception - Media manipulation - Propaganda - Propaganda techniques - Disinformation

- Culture - Cultural studies - Media culture - Deception - Media manipulation - Propaganda - Propaganda techniques - Disinformation - News outlets - Facebook

- Culture - Cultural studies - Media culture - Deception - Media manipulation - Propaganda - Propaganda techniques - Disinformation - Fake news

- Culture - Cultural studies - Media culture - Deception - Media manipulation - Psychological manipulation

- Culture - Cultural studies - Media culture - Media - Digital media - Platforms - Facebook

- Culture - Cultural studies - Media culture - Issues - Privacy

- Science - Social sciences - Law - Common law - Torts - Copyright infringement

- Nature - Earth - Geopolitical - Countries - United States - Politics - Issues - Federal elections - Interference

- Science - Issues - Integrity - Fraudulent science - Pseudoscience - Climate change denial - Facebook

- Science - Issues - Integrity - Fraudulent science - Pseudoscience - Conspiracy theories

- Society - Business - Companies - Facebook - Controversies

- Society - Business - Companies - Facebook - Controversies - Antihate Boycotts

- Society - Business - Companies - Meta Platforms Inc.

- Society - Business - Companies - Meta Platforms Inc. - Persons - Mark Zuckerberg

- Society - Business - Companies - Meta Platforms Inc. - Metaverse

- Society - Critical theory - Digital violence - Mass surveillance

- Society - Rights - Human rights - Civil and political rights - Right to protest - Privacy

- Society - Rights - Human rights - Civil and political rights - Right to protest - Privacy - Information privacy

- Society - Issues - Discrimination

- Society - Issues - Discrimination - Racism

- Society - Issues - Disinformation

- Society - Issues - Misinformation

- Society - Issues - Privacy

- Society - Issues - Privacy - Information privacy

- Society - Issues - Privacy - Surveillance

- Society - Issues - Privacy - Surveillance - Commercial - Facebook

- Society - Issues - Privacy - Surveillance - Corporate surveillance

- Technology - Internet - Issues - Abuse

- Technology - Internet - Issues - Privacy - Facebook

- Technology - Internet - Issues - Privacy - Facebook Hack 2021

- Technology - Internet - Issues - Surveillance

- Technology - Internet - Social Media - Social networking service - Facebook

|

|

| Founded |

2004-02-04 (Cambridge, Massachusetts, USA) |

| Founders |

|

| CEO |

Mark Elliot Zuckerberg |

| Parent |

Facebook, Inc. |

| Director |

|

| Leader |

|

| Executives |

|

| Board of Directors |

|

| Staff |

|

| Type of site |

- social networking service

- social media

- publisher

|

| EIN (Tax ID) |

|

| Location |

United States |

| Areas served |

Global, except blocking countries |

| Users |

2.85 billion monthly active users (2021-03-31) |

| Description |

|

| Revenue |

|

| Expenses |

|

| Net assets |

|

| Known for |

- conspiracy theories

- copyright infringement

- digital surveillance

- disinformation

- erosion of privacy

- fake news

- hate speech

- internet addiction

- mass surveillance

- misinformation

- political interference

- psychological manipulation

- social media

- social networking

|

| Website |

Facebook.com |

This article is a stub [additional content pending ...].

Background

Facebook is an American online social media and social networking service owned by Facebook, Inc.

Founded in 2004 by Mark Elliot Zuckerberg with fellow Harvard College students and roommates Eduardo Luiz Saverin, Andrew Aaron McCollum, Dustin Moskovitz, and Chris Hughes, its name comes from the face book directories often given to American university students. Membership was initially limited to Harvard students, gradually expanding to other North American universities and, since 2006, anyone over 13 years old. As of 2021, Facebook claimed 2.8 billion monthly active users, and ranked seventh in global internet usage. It was the most downloaded mobile app of the 2010s.

Facebook can be accessed from devices with Internet connectivity, such as personal computers, tablets and smartphones. After registering, users can create a profile revealing information about themselves. They can post text, photos and multimedia which are shared with any other users who have agreed to be their "friend" or, with different privacy settings, publicly. Users can also communicate directly with each other with Facebook Messenger, join common-interest groups, and receive notifications on the activities of their Facebook friends and pages they follow.

The subject of numerous controversies, Facebook has often been criticized over issues such as user privacy (as with the Cambridge Analytica data scandal), political manipulation (as with the 2016 U.S. elections), mass surveillance, psychological effects such as addiction and low self-esteem, and content such as fake news, conspiracy theories, copyright infringement, and hate speech. Commentators have accused Facebook of willingly facilitating the spread of such content, as well as exaggerating its number of users to appeal to advertisers.

Facebook's Content Ranking: Spread of Misinformation, Hate Speech, and Violence

[MIT TechnologyReview.com, 2021-10-05] The Facebook whistleblower says its algorithms are dangerous. Here's why. Frances Haugen's testimony at the Senate hearing today raised serious questions about how Facebook's algorithms work - and echoes many findings from our previous investigation.

[ ... snip ... ]

How has Facebook's content ranking led to the spread of misinformation and hate speech?

During her testimony, Frances Haugen repeatedly came back to the idea that Facebook's algorithm incites misinformation, hate speech, and even ethnic violence. "Facebook ... knows - they have admitted in public - that engagement-based ranking is dangerous without integrity and security systems but then not rolled out those integrity and security systems in most of the languages in the world," she told the Senate today. "It is pulling families apart. And in places like Ethiopia it is literally fanning ethnic violence."

Here's what I've written about this previously:

The machine-learning models that maximize engagement also favor controversy, misinformation, and extremism: put simply, people just like outrageous stuff.

Sometimes this inflames existing political tensions. The most devastating example to date is the case of Myanmar, where viral fake news and hate speech about the Rohingya Muslim minority escalated the country's religious conflict into a full-blown genocide. Facebook admitted in 2018, after years of downplaying its role, that it had not done enough "to help prevent our platform from being used to foment division and incite offline violence."

As Haugen mentioned, Facebook has also known this for a while. Previous reporting has found that it's been studying the phenomenon since at least 2016.

In an internal presentation from that year, reviewed by the Wall Street Journal, a company researcher, Monica Lee, found that Facebook was not only hosting a large number of extremist groups but also promoting them to its users: "64% of all extremist group joins are due to our recommendation tools," the presentation said, predominantly thanks to the models behind the "Groups You Should Join" and "Discover" features.

In 2017, Chris Cox, Facebook's longtime chief product officer, formed a new task force to understand whether maximizing user engagement on Facebook was contributing to political polarization. It found that there was indeed a correlation, and that reducing polarization would mean taking a hit on engagement. In a mid-2018 document reviewed by the Journal, the task force proposed several potential fixes, such as tweaking the recommendation algorithms to suggest a more diverse range of groups for people to join. But it acknowledged that some of the ideas were "antigrowth." Most of the proposals didn't move forward, and the task force disbanded.

Facebook User Interaction With Borderline & Questionable Content

This subsection is germane to the Climate Change Denial: Facebook article, in which the following statements are attributed to Facebook CEO Mark Zuckerberg.

"Mark Zuckerberg is on record admitting that sensationalist content and misinformation on Facebook is good business."

"Mark Zuckerberg is on record saying that content that tends to be controversial tends to be more shareable, and our feeling is that if you don't do anything about it, it could get worse." -- Stop Funding Heat researcher Sean Buchan

As far as I can determine without a Facebook account, the source for those assertions is a 2018-11-15 Facebook "note" (blog post) by Mark Zuckerberg, "A Blueprint for Content Governance and Enforcement" [Archive.today snapshot | local copy | alternate URL: https://www.facebook.com/notes/751449002072082/].

The statements in question appear to be in the "Discouraging Borderline Content" subsection of that document, reproduced here ["MZ" = Mark Zuckerberg].

Discouraging Borderline Content

[MZ] "One of the biggest issues social networks face is that, when left unchecked, people will engage disproportionately with more sensationalist and provocative content. This is not a new phenomenon. It is widespread on cable news today and has been a staple of tabloids for more than a century. At scale it can undermine the quality of public discourse and lead to polarization. In our case, it can also degrade the quality of our services.

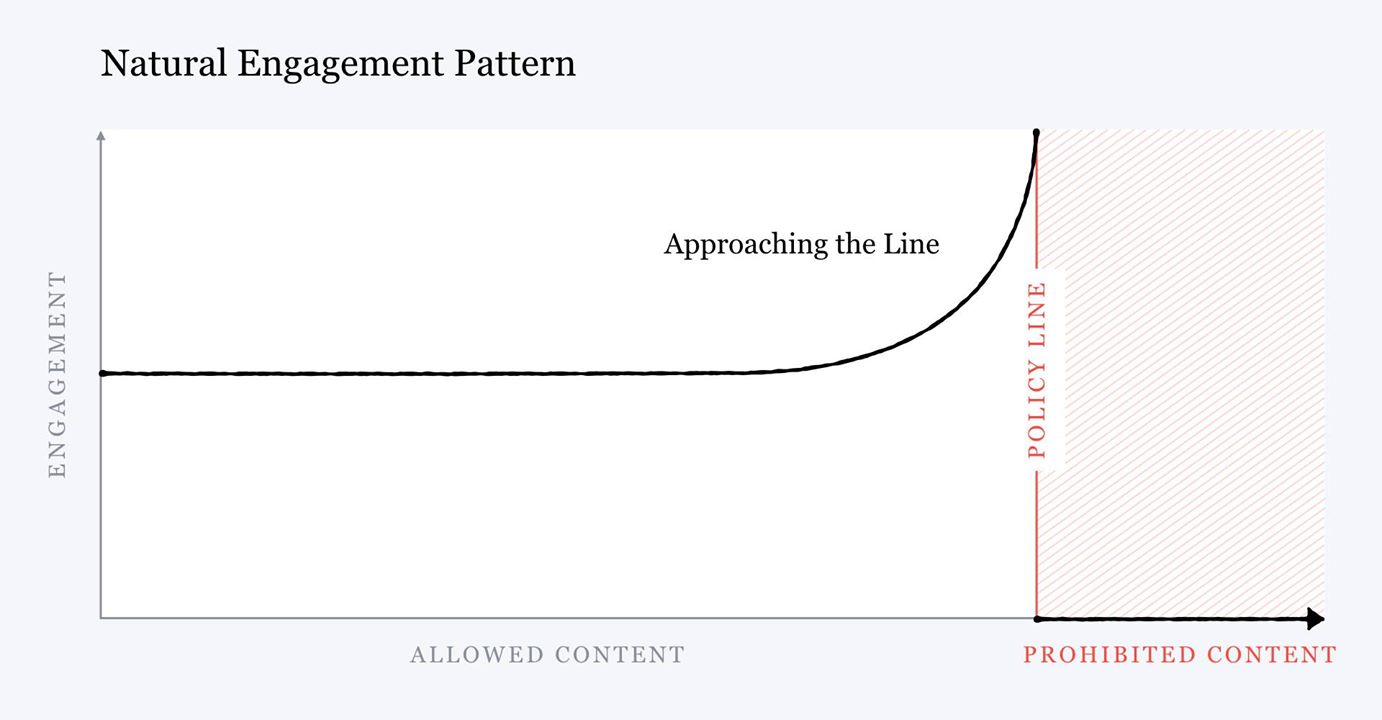

[MZ] "Our research suggests that no matter where we draw the lines for what is allowed, as a piece of content gets close to that line, people will engage with it more on average -- even when they tell us afterwards they don't like the content.

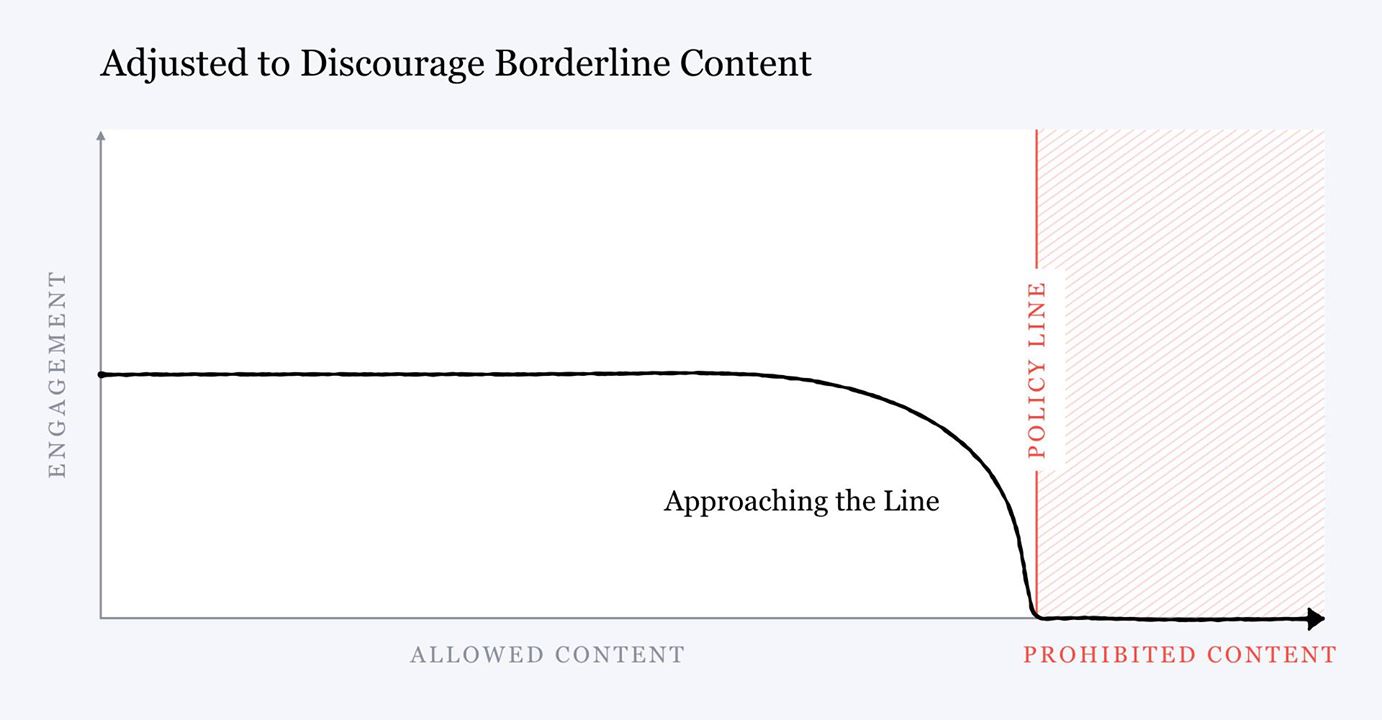

[MZ] "This is a basic incentive problem that we can address by penalizing borderline content so it gets less distribution and engagement. By making the distribution curve look like the graph below where distribution declines as content gets more sensational, people are disincentivized from creating provocative content that is as close to the line as possible.

[MZ] "This process for adjusting this curve is similar to what I described above for proactively identifying harmful content, but is now focused on identifying borderline content instead. We train AI systems to detect borderline content so we can distribute that content less.

[MZ] "The category we're most focused on is click-bait and misinformation. People consistently tell us these types of content make our services worse -- even though they engage with them. As I mentioned above, the most effective way to stop the spread of misinformation is to remove the fake accounts that generate it. The next most effective strategy is reducing its distribution and virality. (I wrote about these approaches in more detail in my note on Preparing for Elections.)

[MZ] "Interestingly, our research has found that this natural pattern of borderline content getting more engagement applies not only to news but to almost every category of content. For example, photos close to the line of nudity, like with revealing clothing or sexually suggestive positions, got more engagement on average before we changed the distribution curve to discourage this. The same goes for posts that don't come within our definition of hate speech but are still offensive.

[MZ] "This pattern may apply to the groups people join and pages they follow as well. This is especially important to address because while social networks in general expose people to more diverse views, and while groups in general encourage inclusion and acceptance, divisive groups and pages can still fuel polarization. To manage this, we need to apply these distribution changes not only to feed ranking but to all of our recommendation systems for things you should join.

[MZ] "One common reaction is that rather than reducing distribution, we should simply move the line defining what is acceptable. In some cases this is worth considering, but it's important to remember that won't address the underlying incentive problem, which is often the bigger issue. This engagement pattern seems to exist no matter where we draw the lines, so we need to change this incentive and not just remove content.

[MZ] "I believe these efforts on the underlying incentives in our systems are some of the most important work we're doing across the company. We've made significant progress in the last year, but we still have a lot of work ahead.

[MZ] "By fixing this incentive problem in our services, we believe it'll create a virtuous cycle: by reducing sensationalism of all forms, we'll create a healthier, less polarized discourse where more people feel safe participating."
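The incentive problem described in the note above can be illustrated with a toy model. The sketch below is purely illustrative and is not Facebook's actual ranking system: the function names, the exponential engagement curve, the `POLICY_LINE` constant, and the linear penalty are all assumptions chosen only to reproduce the two shapes the note describes (engagement rising as content nears the line, and distribution declining once borderline content is penalized).

```python
import math

# Hypothetical score at which content is prohibited outright (assumption).
POLICY_LINE = 1.0


def natural_engagement(closeness: float) -> float:
    """Toy model: engagement rises as content approaches the policy line."""
    return math.exp(3.0 * closeness)


def adjusted_distribution(closeness: float, start: float = 0.5) -> float:
    """Toy penalty: beyond `start`, distribution falls linearly to zero at the line."""
    if closeness >= POLICY_LINE:
        return 0.0  # prohibited content is removed, not merely demoted
    if closeness <= start:
        return natural_engagement(closeness)  # ordinary content is untouched
    peak = natural_engagement(start)
    return peak * (POLICY_LINE - closeness) / (POLICY_LINE - start)


if __name__ == "__main__":
    for c in (0.0, 0.3, 0.5, 0.6, 0.9):
        print(f"closeness={c:.1f}  natural={natural_engagement(c):6.2f}  "
              f"adjusted={adjusted_distribution(c):6.2f}")
```

In this sketch, content far from the line is distributed as before, while content close to the line receives less distribution the nearer it gets, which is the change in incentive the note argues for.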

Facebook Papers (Facebook Files)

Wikipedia: 2021 Facebook leak.

[CommonDreams.org, 2021-10-25] Profits Before People: The Facebook Papers Expose Tech Giant Greed. "This industry is rotten at its core," said one critic, "and the clearest proof of that is what it's doing to our children." | "It's time for immediate action to hold the company accountable for the many harms it's inflicted on our democracy." | "Kids don't stand a chance against the multibillion dollar Facebook machine, primed to feed them content that causes severe harm to mental and physical well being."

Internal documents dubbed "The Facebook Papers" were published widely Monday [2021-10-25] by an international consortium of news outlets who jointly obtained the redacted materials recently made available to the U.S. Congress by company whistleblower Frances Haugen. The papers were shared among 17 U.S. outlets as well as a separate group of news agencies in Europe, with all the journalists involved sharing the same publication date but performing their own reporting based on the documents.

According to the Financial Times, the "thousands of pages of leaked documents paint a damaging picture of a company that has prioritized growth" over other concerns. And The Washington Post concluded that the choices made by founder and CEO Mark Zuckerberg, as detailed in the revelations, "led to disastrous outcomes" for the social media giant and its users.

From an overview of the documents and the reporting project by The Associated Press:

"The papers themselves are redacted versions of disclosures that Haugen has made over several months to the Securities and Exchange Commission, alleging Facebook was prioritizing profits over safety and hiding its own research from investors and the public.

"These complaints cover a range of topics, from its efforts to continue growing its audience, to how its platforms might harm children, to its alleged role in inciting political violence. The same redacted versions of those filings are being provided to members of Congress as part of its investigation. And that process continues as Haugen's legal team goes through the process of redacting the SEC filings by removing the names of Facebook users and lower-level employees and turns them over to Congress."

One key revelation highlighted by the Financial Times is that Facebook has been perplexed by its own algorithms; another key revelation was that Facebook "fiddled while the Capitol burned" during the January 6th 2021 insurrection [2021 United States Capitol attack] staged by loyalists to former President Donald Trump trying to halt the certification of last year's election.

[ ... snip ... ]

[theAtlantic.com, 2021-10-25] How Facebook Fails 90 Percent of Its Users. Internal documents show the company routinely placing public-relations, profit, and regulatory concerns over user welfare. And if you think it's bad here, look beyond the U.S.

[ ... snip ... ]

In Their Own Words

After a prominent Indian politician's call to kill Muslims was left on Facebook for months, employees took to Workplace (the company's internal message board) to air their frustrations with the way Facebook handles hate speech in that country. Editor's note: the following messages are direct quotes from Facebook employees. We've reproduced screenshots to make them more legible.

"The thing that concerns me is that these posts were not from some small account with 50 people, they were from a single 'official' political source. As there are [a] limited number of politicians, I find it inconceivable that we don't have even basic key word detection set up to catch this sort of thing. After all cannot be proud as a company if we continue to let such barbarism flourish on our network."

"Overall this incident is just sad - it breaks trust and makes it that much harder to build community around the world. I hope we can learn from it and continue to change our ways to be more consistent and not give hate speech a home."

"For me, the concern is how I think we showed up on the matter of leaving such hateful content up on the platform. When there is a post from a prominent account like this one, there are comments and it gets shared - in this case, I saw examples of content inciting violence both in the posts, videos as well as comments. The number of likes were upwards of 1000s, comments and shares were in [the] 100s. Each such post does immense damage ... The fact that such content from prominent accounts ... remains on our platforms ... does feel inadequate."

"I have a very basic question that the instances pointed out in the article are very similar in nature and despite having such strong processes around hate speech, how come there are so many instances that we have failed? It does speak on the efficacy of the process."

Reading these documents is a little like going to the eye doctor and seeing the world suddenly sharpen into focus. In the United States, Facebook has facilitated the spread of misinformation, hate speech, and political polarization. It has algorithmically surfaced false information about conspiracy theories and vaccines, and was instrumental in the ability of an extremist mob to attempt a violent coup at the [United States] Capitol [2021 United States Capitol attack]. That much is now painfully familiar.

But these documents show that the Facebook we have in the United States is actually the platform at its best. It's the version made by people who speak our language and understand our customs, who take our civic problems seriously because those problems are theirs too. It's the version that exists on a free internet, under a relatively stable government, in a wealthy democracy. It's also the version to which Facebook dedicates the most moderation resources. Elsewhere, the documents show, things are different. In the most vulnerable parts of the world - places with limited internet access, where smaller user numbers mean bad actors have undue influence - the trade-offs and mistakes that Facebook makes can have deadly consequences.

[ ... snip ... ]

[WSJ.com, 2021-10-01] The Facebook Files. | [discussion, 2021-10-01+] Hacker News

Facebook, Inc. [now: Meta Platforms, Inc.] knows, in acute detail, that its platforms are riddled with flaws that cause harm, often in ways only the company fully understands. That is the central finding of a Wall Street Journal series, based on a review of internal Facebook documents, including research reports, online employee discussions and drafts of presentations to senior management.

Time and again, the documents show, Facebook's researchers have identified the platform's ill effects. Time and again, despite congressional hearings, its own pledges and numerous media exposés, the company didn't fix them. The documents offer perhaps the clearest picture thus far of how broadly Facebook's problems are known inside the company, up to the chief executive himself.

Facebook Files sections [content omitted, here].

[NPR.org, 2021-10-25] The Facebook Papers: What you need to know about the trove of insider documents. | Editor's note: Facebook is among NPR's recent financial supporters.

[CTVNews.ca, 2021-10-25] Whistleblower Frances Haugen says Facebook making online hate worse. Facebook whistleblower Frances Haugen told British lawmakers Monday [2021-10-25] that the social media giant stokes online hate and extremism, fails to protect children from harmful content and lacks any incentive to fix the problems, providing momentum for efforts by European governments working on stricter regulation of tech companies. While her testimony echoed much of what she told the U.S. Senate this month, her in-person appearance drew intense interest from a British parliamentary committee that is much further along in drawing up legislation to rein in the power of social media companies. It comes the same day that Facebook is set to release its latest earnings and that The Associated Press and other news organizations started publishing stories based on thousands of pages of internal company documents she obtained. ... | Amid fallout from the Facebook Papers showing it failed to address the harms its social network has created around the world, Facebook on Monday [2021-10-25] reported higher profit for the latest quarter, buoyed by strong advertising revenue.

[Financial Times: FT.com, 2021-10-25] Four revelations from the Facebook Papers. Thousands of pages of leaked documents paint a damaging picture of a company that has prioritised growth.

[Financial Times: FT.com, 2021-10-25] Employees pleaded with Facebook to stop letting politicians bend rules. Documents obtained by whistleblower suggest executives intervened to let contentious posts stay up. | Discussion: Hacker News: 2021-10-25

[CBC.ca, 2021-10-25] Facebook knew about and failed to police abusive content globally: documents. Efforts to keep Facebook products from being used for hate, misinformation have trailed its growth.

[NPR.org, 2021-10-23] Facebook dithered in curbing divisive user content in India. Facebook in India has been selective in curbing hate speech, misinformation and inflammatory posts, particularly anti-Muslim content, according to leaked documents obtained by The Associated Press, even as its own employees cast doubt over the company's motivations and interests. From research as recent as March of this year to company memos that date back to 2019, the internal company documents on India highlight Facebook's constant struggles in quashing abusive content on its platforms in the world's biggest democracy and the company's largest growth market. Communal and religious tensions in India have a history of boiling over on social media and stoking violence. ...

[theVerge.com, 2021-10-22] A new Facebook whistleblower has come forward with more allegations. The anonymous person says the company repeatedly put profits first.

A second Facebook whistleblower has come forward with a new set of allegations about how the social media platform does business. First reported by The Washington Post, the person is a former member of Facebook's integrity team and says the company puts profits before efforts to fight hate speech and misinformation on its platform.

In the affidavit, copies of which were provided to The Verge, the whistleblower alleges, among other things, that a former Facebook communications official dismissed concerns about interference by Russia in the 2016 presidential election, assisted unwittingly by Facebook. Tucker Bounds said, according to the affidavit, that the situation would be "a flash in the pan. Some legislators will get pissy. And then in a few weeks they will move on to something else. Meanwhile we are printing money in the basement and we are fine."

The whistleblower alleged differences between Facebook's public statements and internal decision-making in other areas. They say that the Internet.org project to connect people in the "developing world" had internal messaging that the goal was to give Facebook an impenetrable foothold and become the "sole source of news" so they could harvest data from untapped markets.

[ ... snip ... ]

[theConversation.com, 2021-10-18] Why Facebook and other social media companies need to be reined in. In 2021-09, the Wall Street Journal released the Facebook Files. Drawing on thousands of documents leaked by whistleblower and former employee Frances Haugen, the Facebook Files show that the company knows their practices harm young people, but fails to act, choosing corporate profit over public good. The Facebook Files are damning for the company, which also owns Instagram and WhatsApp. However, it isn't the only social media company that compromises young people's internationally protected rights and well-being by prioritizing profits. As researchers and experts on children's rights, online privacy and equality and the online risks, harms and rewards that young people face, the news over the past few weeks didn't surprise us. ... | Harvested personal data | Surveillance capitalism | Removing toxic content hurts the bottom line

[theNation.com, 2021-10-18] Mark Zuckerberg Knows Exactly How Bad Facebook Is. Whistleblower testimony proves the social media giant is harmful and dishonest, and can't be trusted to regulate itself.

[JacobinMag.com, 2021-10-09] Facebook Harms Its Users Because That's Where Its Profits Are. Facebook has been the target of an unprecedented flood of criticism in recent months - and rightly so. But too many critics seem to forget that the company is driven to do bad things by its thirst for profit, not by a handful of mistaken ideas. ...

[CTVNews.ca, 2021-10-09] Philippine Nobel winner Ressa calls Facebook 'biased against facts'. 2021 Nobel Peace Prize co-winner Maria Ressa used her new prominence to criticize Facebook as a threat to democracy, saying the social media giant fails to protect against the spread of hate and disinformation and is "biased against facts." The veteran journalist and head of Philippine news site Rappler told Reuters in an interview after winning the award that Facebook's algorithms "prioritize the spread of lies laced with anger and hate over facts." ... Ressa shared the Nobel with Russian journalist Dmitry Muratov on Friday [2021-10-08], for what the committee called braving the wrath of the leaders of the Philippines and Russia to expose corruption and misrule, in an endorsement of free speech under fire worldwide. ...

[NPR.org, 2021-10-05] Watch Live: Whistleblower tells Congress Facebook products harm kids and democracy.

[CTVNews.ca, 2021-10-05] Ex-Facebook employee says products hurt kids, fuel division

A former Facebook data scientist told U.S. Congress on Tuesday [2021-10-05] that the social network giant's products harm children and fuel polarization in the U.S. while its executives refuse to make changes because they elevate profits over safety. Frances Haugen testified to the Senate Commerce Subcommittee on Consumer Protection. She is accusing the company of being aware of apparent harm to some teens from Instagram and being dishonest in its public fight against hate and misinformation.

Frances Haugen has come forward with a wide-ranging condemnation of Facebook, buttressed with tens of thousands of pages of internal research documents she secretly copied before leaving her job in the company's civic integrity unit. She also has filed complaints with federal authorities alleging that Facebook's own research shows that it amplifies hate, misinformation and political unrest, but the company hides what it knows.

Haugen says she is speaking out because of her belief that "Facebook's products harm children, stoke division and weaken our democracy." "The company's leadership knows how to make Facebook and Instagram safer but won't make the necessary changes because they have put their astronomical profits before people," she says in her written testimony prepared for the hearing. "Congressional action is needed. They won't solve this crisis without your help." After recent reports in The Wall Street Journal based on documents she leaked to the newspaper raised a public outcry, Haugen revealed her identity in a CBS "60 Minutes" interview aired Sunday night [2021-10-03]. She insisted that "Facebook, over and over again, has shown it chooses profit over safety."

[ ... snip ... ]

[CBC.ca, 2021-10-03] Facebook whistleblower alleges social network fed U.S. Capitol riot. Former product manager appears in 60 Minutes interview, as top exec derides allegations as 'misleading.'

[OpenSecrets.org, 2021-10-01] Facebook maintained big lobbying expenses ahead of Senate hearing on teen social media use.

Senators from both parties levied criticism against Facebook at a consumer protection subcommittee hearing Thursday [2021-09-30], questioning the company's global head of safety, Antigone Davis, about Instagram's effects on teenagers. Instagram is owned by Facebook.

The new scrutiny comes after months of bipartisan legislative talks to limit "Big Tech" and after Facebook spent $9.5 million on federal lobbying in the first half of 2021, the second most of all Big Tech companies. In 2020, Facebook spent more on lobbying than any Big Tech company, totaling $19.6 million. That year, Facebook ranked sixth in lobbying expenditures among all registered lobbying clients.

The hearing was set in motion after The Wall Street Journal published internal Facebook documents showing the social media giant's research had identified harmful mental health effects of Instagram on teenagers.

Facebook announced Monday it would pause its work on creating a kids version of the photo sharing app, in light of the document leak. The company has largely skirted blame for the issue, arguing that it had made changes to limit harm and that many teen users reported a positive experience with the app.

The internal documents said that Instagram made "body image issues worse for one in three teen girls." Among teens who reported suicidal thoughts, 13% of British users and 6% of American users traced those thoughts to Instagram, according to Facebook's research documents.

[ ... snip ... ]

[CBC.ca, 2021-09-30] U.S. senators grill Facebook exec about Instagram's potential harm to teen girls. Whistleblower leaked internal research studying links between Instagram, peer pressure and body image.

United States Senators fired a barrage of criticism Thursday [2021-09-30] at a Facebook executive over the social-networking giant's handling of internal research on how its Instagram photo-sharing platform can harm teens. The lawmakers accused Facebook of concealing the negative findings about Instagram and demanded a commitment from the company to make changes.

During testimony before a Senate commerce subcommittee, Antigone Davis [local copy], Facebook's head of global safety, defended Instagram's efforts to protect young people using its platform. She disputed the way a recent newspaper story describes what the research shows. "We care deeply about the safety and security of the people on our platform," Davis said. "We take the issue very seriously.... We have put in place multiple protections to create safe and age-appropriate experiences for people between the ages of 13 and 17."

Sen. Richard Blumenthal, a Democrat from Connecticut, the subcommittee chairman, wasn't convinced. "I don't understand how you can deny that Instagram is exploiting young users for its own profit," Blumenthal told Davis.

[ ... snip ... ]

[WSJ.com, 2021-09-29] Facebook's Documents About Instagram and Teens, Published. The Senate holds a hearing Thursday [2021-09-30] about the social network's impact, prompted by The Wall Street Journal's coverage.

Facebook, Inc. [now: Meta Platforms, Inc.] is scheduled to testify at a Senate hearing on Thursday about its products' effects on young people's mental health. The hearing in front of the Commerce Committee's consumer-protection subcommittee was prompted by a mid-September 2021 article in The Wall Street Journal. Based on internal company documents, it detailed Facebook's internal research on the negative impact of its Instagram app on teen girls and others.

Six of the documents that formed the basis of the Instagram article are published below. A person seeking whistleblower status has provided these documents to Congress. Facebook published two of these documents earlier Wednesday [2021-09-29].

The Instagram article was part of a series called the Facebook Files, which was based on research reports, online employee discussions and drafts of presentations to senior management, among other company communications. It revealed how clearly Facebook knows its platforms are flawed.

[ ... snip ... ]

This article is a stub [additional content pending ...].

Jedi Blue: Google-Facebook Antitrust Collusion

[Forbes.com, 2021-01-19] The Jedi Blue case, exposed by The Wall Street Journal and followed up on [source] by The New York Times, is a clear example of the abuse of Google and Facebook's dominant positions, and definitive proof as to why the tech giants need regulating. It's pretty much a textbook case of everything that can go wrong in an industry. What is Jedi Blue? Basically a quid-pro-quo scheme that starts with Google's 2007 acquisition of DoubleClick, and ends with Facebook, in 2018, agreeing not to challenge Google's advertising business in return for a very special treatment in Google's ad auctions. ...

Meta Platforms Inc. | Metaverse

[JacobinMag.com, 2021-10-28] Facebook Is Now Meta. And It Wants to Monetize Your Whole Existence. Mark Zuckerberg's turn toward the "metaverse" claims to put an extra digital layer on top of the real world. But Facebook's new Meta brand isn't augmenting your reality - it just wants to suck more money out of it. | "Welcome to the Zuckerverse - a place nobody asked for but in which we may soon all be spending a lot of time." | "Meta wants to extend its reach from a mere global social network to become the digital infrastructure of everyday life." | "The end goal for Meta is that it is no longer a service you use, but instead, the infrastructure upon which you live." | "This isn't just about collecting data, it's about owning the servers and the digital worlds."

You log on, and you're herded into a virtual bar to listen to your boss telling jokes. Meanwhile, a metaverse-first real estate company is selling off overpriced property in a virtual London, and gamers are competing for non-fungible tokens. Welcome to the Zuckerverse - a place nobody asked for but in which we may soon all be spending a lot of time.

On Thursday [2021-10-28], Facebook changed its name to Meta, as part of a broader shift toward the so-called metaverse - a network of interconnected experiences partly accessed through virtual reality (VR) headsets and augmented reality (AR) devices. In Zuckerberg's own words, "you can think about the metaverse as an embodied internet, where instead of just viewing content - you are in it." The most recognizable examples of this in action are virtual office meetings with VR goggles, playing games in an expansive online universe, and accessing a digital layer on top of the real world through AR.

As owner of Facebook, Instagram, WhatsApp, and the virtual reality firm Oculus, the holding company now known as Meta plans to create an interconnected world in which our work, life, and leisure all take place on its infrastructure - monetizing all aspects of our lives. For now, this is still the stuff of fantasy. Yet it's also the fantasy of one of the most powerful men in the world - and for this reason, it deserves our attention.

In an influential essay, venture capitalist Matthew Ball writes, "the Metaverse will be a place in which proper empires are invested in and built, and where these richly capitalized businesses can fully own a customer, control APIs/data, unit economics, etc." Which does sound a little creepy . . .

Meta is hoping that, by building hype around it, others will be encouraged to follow in developing the project. It's like building a post office and a store and calling it a city. The hope is to get enough companies on board with its project that, soon enough, we will all be using it - whether we like it or not.

[ ... snip ... ]

[MIT TechnologyReview.com, 2021-11-16] The metaverse is the next venue for body dysmorphia online. Some people are excited to see realistic avatars that look like them. Others worry it might make body image issues even worse.

[theVerge.com, 2021-11-12] Meta CTO thinks bad metaverse moderation could pose an "existential threat". Meta aims for "Disney" safety, but probably won't reach it.

Meta (formerly Facebook) CTO Andrew Bosworth warned employees that creating safe virtual reality experiences was a vital part of its business plan - but also potentially impossible at a large scale.

In an internal memo [Archive.today snapshot | local copy] seen by Financial Times, Andrew Bosworth apparently said he wanted Meta virtual worlds to have "almost Disney levels of safety" [The Walt Disney Company], although spaces from third-party developers could have looser standards than directly Meta-built content. Harassment or other toxic behavior could pose an "existential threat" to the company's plans for an embodied future internet if it turned mainstream consumers off virtual reality (VR).

At the same time, Andrew Bosworth said policing user behavior "at any meaningful scale is practically impossible." Financial Times reporter Hannah Murphy later tweeted that Bosworth was citing Masnick's Impossibility Theorem: a maxim, coined by Techdirt founder Mike Masnick, that says "content moderation at scale is impossible to do well." (Masnick's writing notes that this isn't an argument against pushing for better moderation, but large systems will "always end up frustrating very large segments of the population.")

Andrew Bosworth apparently suggests that Meta could moderate spaces like its Horizon Worlds VR platform using a stricter version of its existing community rules, saying VR or metaverse moderation could have "a stronger bias towards enforcement along some sort of spectrum of warning, successively longer suspensions, and ultimately expulsion from multi-user spaces."

While the full memo isn't publicly available, Andrew Bosworth posted a blog entry alluding to it later in the day. The post, titled "Keeping people safe in VR and beyond," references several of Meta's existing VR moderation tools. That includes letting people block other users in VR, as well as an extensive Horizon surveillance system for monitoring and reporting bad behavior. Meta has also pledged $50 million for research into practical and ethical issues around its metaverse plans.

As Financial Times notes, Meta's older platforms like Facebook and Instagram have been castigated for serious moderation failures, including slow and inadequate responses to content that promoted hate and incited violence. The company's recent rebranding offers a potential fresh start, but as the memo notes, VR and virtual worlds will likely face an entirely new set of problems on top of existing issues.

"We often have frank conversations internally and externally about the challenges we face, the trade-offs involved, and the potential outcomes of our work," Andrew Bosworth wrote in the blog post. "There are tough societal and technical problems at play, and we grapple with them daily."

[NPR.org, 2021-10-28] What the metaverse is and how it will work.

The term "metaverse" is the latest buzzword to capture the tech industry's imagination - so much so that one of the best-known internet platforms is rebranding to signal its embrace of the futuristic idea. Facebook CEO Mark Zuckerberg's Thursday announcement that he's changing his company's name to Meta Platforms Inc., or Meta for short, might be the biggest thing to happen to the metaverse since science fiction writer Neal Stephenson coined the term for his 1992 novel "Snow Crash."

[ ... snip ... ]

[theAtlantic.com, 2021-10-21] The Metaverse Is Bad. It is not a world in a headset but a fantasy of power.

In science fiction, the end of the world is a tidy affair. Climate collapse or an alien invasion drives humanity to flee on cosmic arks, or live inside a simulation. Real-life apocalypse is more ambiguous. It happens slowly, and there's no way of knowing when the Earth is really doomed. To depart our world, under these conditions, is the same as giving up on it.

And yet, some of your wealthiest fellow earthlings would like to do exactly that. Elon Musk, Jeff Bezos, and other purveyors of private space travel imagine a celestial paradise where we can thrive as a "multiplanet species." That's the dream of films such as Interstellar and WALL-E. Now comes news that Mark Zuckerberg has embraced the premise of The Matrix, that we can plug ourselves into a big computer and persist as flesh husks while reality decays around us. According to a report this week from The Verge, the Facebook chief may soon rebrand his company to mark its change in focus from social media to "the metaverse."

In a narrow sense, this phrase refers to . More broadly, though, it's a fantasy of power and control.

[ ... snip ... ]

[CBC.ca, 2021-10-20] Facebook to rebrand itself and focus on the metaverse. Company under fire seeking to reorganize the way Google did with Alphabet parent company.

Facebook Inc., under fire from regulators and lawmakers over its business practices, is planning to rebrand itself with a new group name that focuses on the metaverse, The Verge reported on Tuesday [2021-10-19]. The name change will be announced next week, according to the technology blog, which cited a source with direct knowledge of the matter.

Facebook CEO Mark Zuckerberg has been talking up the metaverse, a digital world where people can move between different devices and communicate in a virtual environment, since July. The group has invested heavily in virtual reality and augmented reality, developing hardware such as its Oculus VR headsets and working on AR glasses and wristband technologies.

The move would likely position the flagship app as one of many products under a parent company overseeing brands such as Instagram and WhatsApp, according to the report. Google adopted such a structure when it reorganized into a holding company called Alphabet in 2015.

[ ... snip ... ]

[JacobinMag.com, 2021-09-25] Mark Zuckerberg's "Metaverse" Is a Dystopian Nightmare. The Facebook founder intends to usher in a new era of the internet where there's no distinction between the virtual and the real - and no logging off. | "If the Metaverse sounds like something ripped from the pages of a science-fiction novel, that's because it was." | The Gargoylification of Society | "For gargoyles, who inhabit the Metaverse at all times, there is no 'logging on' and 'logging off.'" | "Ever wonder why we need the word 'lived' as a modifier placed before 'experience' now?" | "Facebook's own history proves that it's much easier to colonize minds than Mars."

[MatthewBall.vc, 2020-01-13] The Metaverse: What It Is, Where to Find it, Who Will Build It, and Fortnite. | "Note: This piece was written in January 2020. In June 2021, I released a nine-part update, 'The Metaverse Primer'." -- Matthew Ball

Facebook and Climate Change Denial

See main article: Climate Change Denial: Facebook

This article is a stub [additional content pending ...].

Additional Reading

[BMJ.com, 2022-01-19] Facebook versus The BMJ: when fact checking goes wrong. The BMJ has locked horns with Facebook and the gatekeepers of international fact checking after one of its investigations was wrongly labelled with "missing context" and censored on the world's largest social network. Rebecca Coombes and Madlen Davies report. | Discussion: Hacker News: 2022-01-20

[BMJ.com, 2021-12-17] Open letter from The BMJ to Mark Zuckerberg

On 2021-12-17 The BMJ (British Medical Journal) issued an open letter to Mark Zuckerberg, harshly criticizing Facebook's and Lead Stories' inaccurate and misleading representations of The BMJ's investigative reporting on the COVID-19 pandemic.

"Readers were directed to a "fact check" performed by a Facebook contractor named Lead Stories. ... We find the "fact check" performed by Lead Stories to be inaccurate, incompetent and irresponsible. ... We are aware that The BMJ is not the only high quality information provider to have been affected by the incompetence of Meta's fact checking regime. ... Rather than investing a proportion of Meta's substantial profits to help ensure the accuracy of medical information shared through social media, you have apparently delegated responsibility to people incompetent in carrying out this crucial task. ..."

Facebook Oversight Board. The Oversight Board is a body that makes consequential precedent-setting content moderation decisions (see Table of decisions) on the social media platforms Facebook and Instagram. Facebook CEO Mark Elliot Zuckerberg approved the creation of the board in November 2018, shortly after a meeting with Harvard Law School professor Noah Feldman, who had proposed the creation of a quasi-judiciary on Facebook. ...

Reuters.com Special Report - "Hatebook" - 2018-08-15: Why Facebook is losing the war on hate speech in Myanmar. Inside Facebook's Myanmar operation. Reuters found more than 1,000 examples of posts, comments and pornographic images attacking the Rohingya and other Muslims on Facebook. A secretive operation set up by the social media giant to combat the hate speech is failing to end the problem.

In April 2018, Facebook founder Mark Zuckerberg told U.S. senators that the social media site was hiring dozens more Burmese speakers to review hate speech posted in Myanmar. The situation was dire. Some 700,000 members of the Rohingya community had recently fled the country amid a military crackdown and ethnic violence. In March 2018, a United Nations investigator said Facebook was used to incite violence and hatred against the Muslim minority group. Facebook, she said, had "turned into a beast."

Four months after Mark Zuckerberg's pledge to act, here is a sampling of posts from Myanmar that were viewable this month [2018-08] on Facebook. One user posted a restaurant advertisement featuring Rohingya-style food. "We must fight them the way Hitler did the Jews, damn kalars!" the person wrote, using a pejorative for the Rohingya [kalar: a Burmese term for Burmese Indians]. That post went up in December 2013. Another post showed a news article from an army-controlled publication about attacks on police stations by Rohingya militants. "These non-human kalar dogs, the Bengalis, are killing and destroying our land, our water and our ethnic people," the user wrote. "We need to destroy their race." That post went up last September, as the violence against the Rohingya peaked. A third user shared a blog item that pictures a boatload of Rohingya refugees landing in Indonesia. "Pour fuel and set fire so that they can meet Allah faster," a commenter wrote. The post appeared 11 days after Zuckerberg's Senate testimony.

The remarks are among more than 1,000 examples Reuters found of posts, comments, images and videos attacking the Rohingya or other Myanmar Muslims that were on Facebook as of last week [2018-08]. Almost all are in the main local language, Burmese. The anti-Rohingya and anti-Muslim invective analyzed for this article - which was collected by Reuters and the Human Rights Center at UC Berkeley School of Law - includes material that's been up on Facebook for as long as six years.

The poisonous posts call the Rohingya or other Muslims dogs, maggots and rapists, suggest they be fed to pigs, and urge they be shot or exterminated. The material also includes crudely pornographic anti-Muslim images. The company's rules specifically prohibit attacking ethnic groups with "violent or dehumanising speech" or comparing them to animals. Facebook also has long had a strict policy against pornographic content.

The use of Facebook to spread hate speech against the Rohingya in the Buddhist-majority country has been widely reported by the United Nations and others. Now, a Reuters investigation gives an inside look at why Facebook has failed to stop the problem.

For years, Facebook - which reported net income of $15.9 billion in 2017 - devoted scant resources to combat hate speech in Myanmar, a market it dominates and in which there have been regular outbreaks of ethnic violence. In early 2015, there were only two people at Facebook who could speak Burmese reviewing problematic posts. Before that, most of the people reviewing Burmese content spoke English.

[ ... snip ... ]

[NPR.org, 2022-02-23] Facebook fell short of its promises to label climate change denial, a study finds.

Facebook is falling short on its pledge to crack down on climate misinformation, according to a new analysis from a watchdog group. The platform - whose parent company last year rebranded as Meta Platforms, Inc. - promised last May [2021-05] that it would attach "informational labels" to certain posts about climate change in the United States and some other countries, directing readers to a "Climate Science Information Center" with reliable information and resources. (Facebook launched a similar hub for COVID-19 information in 2020-03).

But a new report released Wednesday [2022-02-23 | local copy] from the Center for Countering Digital Hate finds that the platform only labeled about half of the posts promoting articles from the world's leading publishers of climate change denial. "By failing to do even the bare minimum to address the spread of climate denial information, Meta is exacerbating the climate crisis," said Center for Countering Digital Hate Chief Executive Imran Ahmed [local copy]. "Climate change denial - designed to fracture our resolve and impede meaningful action to mitigate climate change - flows unabated on Facebook and Instagram."

The British watchdog group [Center for Countering Digital Hate] says that all of the articles it analyzed were published after 2021-05-19 - the date that Facebook announced it would expand its labeling feature in a number of countries. Facebook says it was still testing the system at the time. "We combat climate change misinformation by connecting people to reliable information in many languages from leading organizations through our Climate Science Center and working with a global network of independent fact checkers to review and rate content," Facebook spokesperson Kevin McAlister said in a statement provided to NPR. "When they rate this content as false, we add a warning label and reduce its distribution so fewer people see it. During the time frame of this report, we hadn't completely rolled out our labeling program, which very likely impacted the results."

Researchers examined posts with false information about climate change

The Center for Countering Digital Hate published a report [report | local copy] in 2021-11 finding that 10 publishers - labeled "The Toxic Ten" - were responsible for up to 69% of all interactions with climate denial content on Facebook. They include Breitbart News, the Federalist Papers [actually: The Federalist ?], Newsmax, and Russian state media. Researchers used the social analytics tool NewsWhip to assess 184 articles containing false information about climate change, published by "The Toxic Ten" and posted on Facebook, where they collectively accumulated more than 1 million interactions.

[ ... snip ... ]

[NPR.org, 2022-01-11] Judge allows Federal Trade Commission's latest suit against Facebook to move forward.

The Federal Trade Commission (FTC)'s antitrust lawsuit against Facebook can proceed, a federal judge ruled on Tuesday [2022-01-11], delivering a major win for the FTC after its first attempt at targeting Facebook's alleged monopoly power was dismissed for lack of evidence. This time, however, the judge found that federal regulators have offered enough proof to argue that Facebook's acquisition strategy - particularly its takeover of Instagram and WhatsApp - is driven by a "buy or bury" ethos. In other words, that Facebook allegedly gobbles up competitors in order to maintain an illegal monopoly.

"Although the FTC may well face a tall task down the road in proving its allegations, the U.S. District Court believes that it has now cleared the pleading bar and may proceed to discovery," wrote United States District Court Judge James Boasberg, noting that "the FTC has now alleged enough facts to plausibly establish that Facebook exercises monopoly power in the market for personal social networking services."

Judge narrows the scope of the FTC's lawsuit ["Interoperability"]

While it is an overall win for the FTC, James E. Boasberg did narrow the scope of the lawsuit. He said the accusation concerning Facebook's policies around interoperability, which is the ability to smoothly move between competing social networks, cannot move forward. Boasberg said Facebook abandoned a key policy around interoperability in 2018.

[ ... snip ... ]

[NPR.org, 2022-01-06] Sister of slain security officer sues Facebook over killing tied to Boogaloo movement.

The sister of a federal security officer who was fatally shot while guarding a courthouse during George Floyd-related protests [George Floyd] has sued Facebook, accusing the tech giant of playing a role in radicalizing the alleged shooter. Dave Patrick Underwood, 53, was shot and killed on 2020-05-29 in Oakland, California. Authorities have charged suspected gunman Steven Carrillo with murder. Investigators say Steven Carrillo had ties to the far-right, anti-government boogaloo movement and that Steven Carrillo organized with other boogaloo supporters on Facebook.

In a suit filed on Thursday [2022-01-06] in California state court against Meta, Facebook's parent company, Angela Underwood Jacobs accused Facebook officials of being aware that the social network was being used as a recruitment tool for boogaloo adherents, yet Facebook did not take steps to stop recommending boogaloo-related pages until after Dave Patrick Underwood's death. The boogaloo movement is a collection of extremists who claim to want to overthrow the U.S. government through a second civil war. Sometimes clad in Hawaiian T-shirts, its adherents are known to be heavily armed and are highly active online. Lawyers for Underwood Jacobs claim Facebook was negligent in designing a product "to promote and engage its users in extremist content" despite knowing that it could lead to potential violence. "Facebook, Inc. knew or could have reasonably foreseen that one or more individuals would be likely to become radicalized upon joining boogaloo-related groups on Facebook," the lawsuit states.

Federal investigators have said Steven Carrillo, an Air Force sergeant at the time of the shooting, used Facebook to communicate with other boogaloo supporters. On the same day as Dave Patrick Underwood was killed, Carrillo allegedly posted to a Facebook group that he planned to go to the George Floyd protests in Oakland to "show them the real targets. Use their anger to fuel our fire," he allegedly wrote. "We have mobs of angry people to use to our advantage," according to federal prosecutors. Authorities say Carrillo wrote that the protest was "a group opportunity to target the specialty soup bois," a phrase boogaloo adherents use to refer to law enforcement officials because of the "alphabet soup" of federal law enforcement acronyms. Angela Underwood Jacobs' suit contends that if Facebook had altered its algorithm so that it was not recommending and promoting boogaloo groups, Carrillo might never have connected online with others in the extremist movement. "Facebook bears responsibility for the murder of my brother," Underwood Jacobs said. Facebook did not have an immediate response to the suit.

The lawsuit is the latest attempt to hold a Big Tech company accountable for real-world harm. Social media companies largely escape legal responsibility in such cases thanks to a law known as Section 230, which prevents online platforms from being held liable for what users post. There have been rare exceptions in attempting to advance lawsuits against tech companies, like when an appeals court found that Snapchat could be sued [Snapchat] for a feature that allegedly encouraged reckless driving.

Section 230 is a section of Title 47 of the United States Code enacted as part of the United States Communications Decency Act, that generally provides immunity for website platforms with respect to third-party content. At its core, Section 230(c)(1) provides immunity from liability for providers and users of an "interactive computer service" who publish information provided by third-party users: "No provider or user of an interactive computer service shall be treated as the publisher or speaker of any information provided by another information content provider." ...

Eric Goldman, a professor at Santa Clara University Law School who studies Section 230, said Facebook will likely invoke the legal shield in this case, but he said the suit faces other hurdles, as well. "There have been a number of lawsuits trying to establish that Facebook is liable for how violent groups and terrorists used their services," Goldman said. "And courts have consistently rejected those claims because services like Facebook aren't responsible for harms caused by people using the service."

The lawsuit leans heavily on the Facebook Files [Facebook Files, Facebook Papers], a cache of internal company documents exposed in a series of stories by The Wall Street Journal. Among the allegations is that Facebook's algorithm promotes extremist, inflammatory and divisive content in order to keep users engaged and advertising dollars rolling in. Facebook researchers have estimated that the social network only catches between 3% and 5% of hate speech on Facebook.

In a statement, lawyers for Angela Underwood Jacobs said the Facebook Files revealed "Facebook's active role in shaping the content on its website as well as creating and building groups on the platform - activities that fall outside of the conduct protected by Section 230." Facebook has reportedly banned nearly 1,000 private groups focused on "militarized social movements" like the boogaloo movement. Facebook has previously acknowledged its role in militia-fueled violence. In 2020-08, Facebook CEO Mark Zuckerberg said it made an "operational mistake" in failing to remove a page for a militia group that called for armed citizens to enter Kenosha, Wisconsin. Two protesters were shot and killed there during demonstrations over the police killing of Jacob Blake [Shooting of Jacob Blake]. The same month [2020-08], Facebook said it took down 2,400 pages and more than 14,000 groups on Facebook started by militia groups.

[CNIL.fr, 2022-01-06] Cookies: the CNIL fines GOOGLE a total of 150 million euros and FACEBOOK 60 million euros for non-compliance with French legislation. Following investigations, the CNIL noted that the websites Facebook.com, google.fr, and youtube.com do not make refusing cookies as easy as accepting them. It thus fines FACEBOOK 60 million euros and GOOGLE 150 million euros and orders them to comply within three months.

[ProPublica.org, 2022-01-04] Facebook Hosted Surge of Misinformation and Insurrection Threats in Months Leading Up to 2021-01-06 Attack, Records Show. A ProPublica / Washington Post analysis of Facebook posts, internal company documents and interviews, provides the clearest evidence yet that the social media giant played a critical role in spreading lies that fomented the violence of 2021-01-06.

Facebook groups swelled with at least 650,000 Facebook posts attacking the legitimacy of Joe Biden's victory between Election Day [2020-11-03 | 2020 United States presidential election] and the 2021-01-06 siege of the U.S. Capitol [2021 United States Capitol attack], with many calling for executions or other political violence, an investigation by ProPublica and The Washington Post has found.

The barrage - averaging at least 10,000 posts a day, a scale not reported previously - turned the groups into incubators for the baseless claims supporters of then-President Donald Trump voiced as they stormed the United States Capitol, demanding he get a second term. Many posts portrayed Joe Biden's election as the result of widespread fraud that required extraordinary action - including the use of force - to prevent the nation from falling into the hands of traitors.

"LOOKS LIKE CIVIL WAR is BECOMING INEVITABLE !!!" read a post a month before the Capitol assault. "WE CANNOT ALLOW FRAUDULENT ELECTIONS TO STAND ! SILENT NO MORE MAJORITY MUST RISE UP NOW AND DEMAND BATTLEGROUND STATES NOT TO CERTIFY FRAUDULENT ELECTIONS NOW !"

Another post, made 10 days after the election, bore the avatar of a smiling woman with her arms raised in apparent triumph and read, "WE ARE AMERICANS!!! WE FOUGHT AND DIED TO START OUR COUNTRY! WE ARE GOING TO FIGHT...FIGHT LIKE HELL. WE WILL SAVE HER❤ THEN WERE GOING TO SHOOT THE TRAITORS!!!!!!!!!!!"

One post showed an American Civil War-era picture of a gallows with more than two dozen nooses and hooded figures [Ku Klux Klan] waiting to be hanged. Other posts called for arrests and executions of specific public figures - both Democrats and Republicans - depicted as betraying the nation by denying Trump a second term.

"BILL BARR WE WILL BE COMING FOR YOU," wrote a group member after William "Bill" Barr announced the United States Department of Justice had found little evidence to support Trump's claims of widespread vote rigging. "WE WILL HAVE CIVIL WAR IN THE STREETS BEFORE BIDEN WILL BE PRES."

Facebook executives have downplayed Facebook's role in the 2021-01-06 U.S. Capitol attack and have resisted calls - including from its own Oversight Board - for a comprehensive internal investigation. Facebook also has yet to turn over all the information requested by the congressional committee studying the 2021-01-06 attack. Facebook said it is continuing to negotiate with the committee.

The ProPublica/Post investigation, which analyzed millions of posts between Election Day [2020-11-03] and 2021-01-06 and drew on internal Facebook documents and interviews with former employees, provides the clearest evidence yet that Facebook played a critical role in the spread of false narratives that fomented the violence of 2021-01-06. Facebook's efforts to police such content - the investigation also found - were ineffective and started too late to quell the surge of angry, hateful misinformation coursing through Facebook groups - some of it explicitly calling for violent confrontation with government officials, a theme that foreshadowed the storming of the U.S. Capitol that day amid clashes that left five people dead.

[ ... snip ... ]

[CBC.ca, 2021-12-09] Facebook has a massive disinformation problem in India. This student learned firsthand how damaging it can be. Facebook has more than 300 million users in India, and disinformation runs rampant.

[Trust.org, 2021-12-07] Rohingya refugees sue Facebook for $150 billion over Myanmar violence. Rohingya refugees to sue Facebook for inaction against hate speech but Facebook says it is protected from liability under a U.S. internet law.

Rohingya refugees from Myanmar are suing Meta Platforms, Inc., formerly known as Facebook, for $150 billion over allegations that the social media company did not take action against anti-Rohingya hate speech that contributed to violence. A U.S. class-action complaint, filed in California on Monday [2021-12-06] by law firms Edelson PC and Fields PLLC, argues that the company's failures to police content and its platform's design contributed to real-world violence faced by the Rohingya community [Reuters.com Special Report, 2018-08-15: Why Facebook is losing the war on hate speech in Myanmar. Inside Facebook's Myanmar operation]. In a coordinated action, British lawyers also submitted a letter of notice to Facebook's London office.

Facebook did not respond to a Reuters request for comment about the lawsuit. Facebook has said it was "too slow to prevent misinformation and hate" in Myanmar and has said it has since taken steps to crack down on platform abuses in the region, including banning the military from Facebook and Instagram after the 2021-02-01 coup. A Myanmar junta spokesman did not answer phone calls from Reuters seeking comment on the legal action against Facebook.

In 2018, United Nations human rights investigators said the use of Facebook had played a key role in spreading hate speech that fueled the violence. A Reuters investigation that year, cited in the U.S. complaint, found more than 1,000 examples of posts, comments and images attacking the Rohingya and other Muslims on Facebook. Almost all were in the main local language, Burmese. The invective included posts calling the Rohingya or other Muslims dogs, maggots and rapists, suggested they be fed to pigs, and urged they be shot or exterminated. The posts were tolerated in spite of Facebook rules that specifically prohibit attacking ethnic groups with "violent or dehumanising speech" or comparing them to animals.

[ ... snip ... ]

[Vox.com, 2021-11-11] Facebook is quietly buying up the metaverse. Can Mark Zuckerberg M&A a new monopoly?

In corporate finance, mergers and acquisitions (M&A) are transactions in which the ownership of companies, other business organizations, or their operating units is transferred or consolidated with other entities. As an aspect of strategic management, M&A can allow enterprises to grow or downsize, and change the nature of their business or competitive position.

Of the many complaints about Facebook, one comes through consistently: It's just too big. Which is why some critics and regulators want to make it smaller by forcing Mark Zuckerberg to unwind major acquisitions, like Instagram. Mark Zuckerberg's response: Let's get bigger by buying more stuff. After slowing down briefly in 2018, the year the Facebook-Cambridge Analytica scandal erupted, Facebook has been steadily making large acquisitions - at least 21 in the last three years, per data service Pitchbook Data.

Many of the deals have been announced since December 2020, when the U.S. government first filed an antitrust lawsuit against the company, accusing it of maintaining an illegal monopoly in social networking by buying or crushing competitors. The original suit and a revised complaint are aimed at forcing Facebook to divest itself of both Instagram and WhatsApp.

In the past couple years, Facebook's appetite for deals has run the gamut from Giphy, which lets you place funny GIFs in your social media posts, to Kustomer, a business software company for Facebook's corporate clients. Most of them, though, have been concentrated in one area: gaming and virtual reality (VR). Which makes sense, since Zuckerberg has formally announced that gaming and virtual reality, bundled up in the expansive and hard-to-define rubric of "the metaverse," are the future of Facebook. Hence the company's name change to Meta [Meta Platforms, Inc.]. But what's more important is a promise that Facebook will move thousands of its employees into the effort, and plans to lose $10 billion on it this year alone, and much more "for the next several years."

The day after Facebook announced the name change, the company illustrated how it will spend some of that money: a deal to buy Within, the company co-founded by VR pioneer Chris Milk, best known for its Supernatural workout app. People familiar with the transaction say Facebook paid more than $500 million for the company. Other Metaverse-y deals announced this year include Unit 2 Games, which makes a "collaborative game creation platform" called Crayta; Bigbox VR, which makes a popular game for Facebook's Oculus virtual reality goggles; and Downpour Interactive, another VR game-maker.

Those deals were already raising eyebrows before Facebook formally announced that they represented the future of the company. So what should we think of them now? That is: If you think 2021 Facebook needs to be broken up, in part to undo deals from the past like Instagram ($1 billion, 2012) and WhatsApp ($19 billion, 2014), then shouldn't you also be worried about deals Mark Zuckerberg is doing now to build the 2031 version of his company?

[ ... snip ... ]

[theVerge.com, 2021-11-06] Facebook reportedly is aware of the level of "problematic use" among its users. Leaked documents show a team of internal researchers that suggested fixes was disbanded.

Facebook's own internal research found 1 in 8 of its users reported compulsive social media use that interfered with their sleep, work, and relationships - what the social media platform calls "problematic use" but is more commonly known as "internet addiction," the Wall Street Journal reported [refer here]. The social media platform had a team focused on user well-being, which suggested ways to curb problematic use, some of which were put into place. But the company shut down the team in 2019, according to the WSJ.

Pratiti Raychoudhury, a vice president of research for Meta Platforms, Inc., Facebook's new parent company, writes in a blog post that the WSJ misrepresented the research (a claim the company has made about some of the other articles the WSJ has produced based on Facebook's internal documents). She says the company "has been engaged and supportive throughout our multiyear effort to better understand and empower people who use our services to manage problematic use. That's why this work has taken place over multiple years, including now." Raychoudhury argues that "problematic use does not equal addiction," and that the company ships "features to help people manage their experiences on our apps and services."

The report is the latest in an ongoing series from The Wall Street Journal called the Facebook Files, based on internal documents provided by whistleblower Frances Haugen, which suggest Facebook is aware of the problems its platforms can cause. One set of reports, for instance, suggested Facebook knew that its Instagram platform was toxic for teenage users. Haugen testified before Congress on 2021-10-05, saying Facebook was "internally dysfunctional," and that it was unlikely to change its behaviors without action from external regulators.

[WSJ.com, 2021-11-05] Is Facebook Bad for You? It Is for About 360 Million Users, Company Surveys Suggest. The app hurts sleep, work, relationships or parenting for about 12.5% of users, who reported they felt Facebook was more of a problem than other social media. | Archive.today snapshot

[Vox.com, 2021-11-03] Facebook is backing away from facial recognition. Meta isn't. The social network is scaling back facial recognition, but similar technology could show up in the metaverse.

[Reuters.com, 2021-11-02] Facebook will shut down facial recognition system.

Meta Platforms, Inc. [formerly: Facebook, Inc.] announced on Tuesday [2021-11-02] it is shutting down its facial recognition system, which automatically identifies users in photos and videos, citing growing societal concerns about the use of such technology. "Regulators are still in the process of providing a clear set of rules governing its use," Jérôme Pesenti [Anglicized: Jerome Pesenti | local copy], Vice President of Artificial Intelligence at Meta, wrote in a 2021-11-02 blog post [local copy]. "Amid this ongoing uncertainty, we believe that limiting the use of facial recognition to a narrow set of use cases is appropriate."

The removal of face recognition by the world's largest social media platform comes as the tech industry has faced a reckoning over the past few years over the ethics of using the technology. Critics say facial recognition technology - which is popular among retailers, hospitals and other businesses for security purposes - could compromise privacy, target marginalized groups and normalize intrusive surveillance. IBM has permanently ended facial recognition product sales, and Microsoft Corporation and Amazon.com, Inc. have suspended sales to police indefinitely.

The news also comes as Facebook [https://www.reuters.com/technology/changing-facebooks-name-will-not-deter-lawmaker-or-regulatory-scrutiny-experts-2021-10-20] has been under intense scrutiny from regulators and lawmakers over user safety and a wide range of abuses on its platforms. The company, which last week renamed itself Meta Platforms, Inc., said more than one-third of Facebook's daily active users have opted into the face recognition setting on the social media site, and the change will now delete the "facial recognition templates" of more than 1 billion people.

The removal will roll out globally and is expected to be complete by December 2021, a Facebook spokesperson said.

[ ... snip ... ]

[theIntercept.com, 2021-10-12] Revealed: Facebook's Secret Blacklist of "Dangerous Individuals and Organizations". Experts say the public deserves to see the list, a clear embodiment of U.S. foreign policy priorities that could disproportionately censor marginalized groups. | Facebook's Dangerous Individuals and Organizations (DIO) policy has become an unaccountable system that disproportionately punishes certain communities.

[Newsweek.com, 2021-10-04] 1.5 Billion Facebook Users' Personal Information Allegedly Posted for Sale.

[Doctorow.medium.com, 2021-09-30] Facebook thrives on criticism of "disinformation". They'd rather be evil than incompetent. | [discussion, 2021-10-01+] Hacker News

[WashingtonPost.com, 2021-09-29] Recovering locked Facebook accounts is a nightmare. That's on purpose. Social media companies are juggling account security and recovery - and failing users in the process. | [Hacker News, 2021-10-19] How to get back into a hacked Facebook account.

[theAtlantic.com, 2021-09-27] The Largest Autocracy on Earth. Facebook is acting like a hostile foreign power; it's time we treated it that way.

[NPR.org, 2021-09-23] What Leaked Internal Documents Reveal About The Damage Facebook Has Caused. WSJ reporter Jeff Horwitz says Facebook executives often choose to boost engagement at the expense of tackling misinformation and mental health problems, which are rampant on their platforms.

[ProPublica.org, 2021-09-22] Facebook Grew Marketplace to 1 Billion Users. Now Scammers Are Using It to Target People Around the World. ProPublica identified thousands of Marketplace listings and profiles that broke the company's rules, revealing how Facebook failed to safeguard users.

[theMarkup.org, 2021-09-21] Facebook Rolls Out News Feed Change That Blocks Watchdogs from Gathering Data. The tweak, which targets the code in accessibility features for visually impaired users, drew ire from researchers and those who monitor the platform.

Facebook has begun rolling out an update that is interfering with watchdogs monitoring the platform. The Markup has found evidence that Facebook is adding changes to its website code that foil automated data collection of news feed posts - a technique that groups like NYU's Ad Observatory, The Markup, and other researchers and journalists use to audit what's happening on the platform on a large scale.

The changes, which attach junk code to HTML features meant to improve accessibility for visually impaired users, also impact browser-based ad blocking services on the platform. The new code risks damaging the user experience for people who are visually impaired, a group that has struggled to use the platform in the past.

The updates add superfluous text to news feed posts in the form of ARIA tags, an element of HTML code that is not rendered visually by a standard web browser but is used by screen reader software to map the structure and read aloud the contents of a page. Such code is also used by organizations like NYU's Ad Observatory to identify sponsored posts on the platform and weed them out for further scrutiny. ...
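To make the mechanism described above concrete, the following is a minimal sketch of how an audit script might read ARIA labels to flag sponsored posts, and how extra text in those labels could defeat a simple rule. Everything in it is an assumption for illustration: the markup, the junk text, and the function names (`aria_labels`, `naive_is_sponsored`) are hypothetical and are not Facebook's actual HTML, nor the code used by NYU's Ad Observatory or The Markup. The excerpt does not describe the exact form of the superfluous text, so the example simply assumes extra characters mixed into the label.

```python
from html.parser import HTMLParser


class AriaLabelCollector(HTMLParser):
    """Collect the value of every aria-label attribute found in a document."""

    def __init__(self):
        super().__init__()
        self.labels = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs; names arrive lowercased.
        for name, value in attrs:
            if name == "aria-label" and value is not None:
                self.labels.append(value)


def aria_labels(html):
    """Return all aria-label values in the given HTML string, in document order."""
    parser = AriaLabelCollector()
    parser.feed(html)
    parser.close()
    return parser.labels


def naive_is_sponsored(html):
    """Hypothetical audit rule: a post is an ad if a label is exactly 'Sponsored'."""
    return any(label.strip() == "Sponsored" for label in aria_labels(html))


# Hypothetical markup for illustration only (NOT Facebook's real HTML).
CLEAN_POST = '<div role="article"><a aria-label="Sponsored" href="#">Ad</a></div>'
# Assumed form of the "superfluous text": junk characters mixed into the label.
OBFUSCATED_POST = '<div role="article"><a aria-label="Sponsored qx7 zt" href="#">Ad</a></div>'

print(naive_is_sponsored(CLEAN_POST))       # exact-match rule finds the label
print(naive_is_sponsored(OBFUSCATED_POST))  # same rule misses the padded label
```

Under these assumptions, the exact-match rule flags the first post but not the second. A screen reader would also read the padded label aloud in full, which is consistent with the accessibility concern raised in the excerpt.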